Roger Chen

In this project, our task is to take a scanned image containing 3 grayscale images, which represent the world as seen through blue, green, and red filters, and align them into a RGB color image. I chose to use Python and Numpy for this task, because it is what I am most familiar with.

There are a lot of blotches and defects in the input images that make it hard to make sense of. I ended up just taking three equal sized slices of the input image array, by dividing the height of the original image in 3, and making those the three channels.

I noticed that when I loaded the images into Python with the Pillow image library, the small

JPEG images would be arrays of type np.uint8 and have range 0 to 255, whereas the

large TIFF images would be arrays of type np.uint16 and have range 0 to 65535. I

want to use floating point numbers instead of integers, so the first thing I did was to

normalize the values to approximately 0 to 256, so I do not have to worry about numerical

issues regarding integer overflow.

Before I started doing any alignment, I wanted to write the output function so I could save the

final output files and visualize my work. My output function creates a H*W*3 array of type

np.uint8 and fills it in with 3 numpy arrays that represent the three color

channels. I noticed that the input images seemed to be in Blue-Green-Red order, whereas numpy

wants the arrays in Red-Green-Blue order, so I saved each channel to the

2-Nth channel of the numpy array. I output the final images as PNG

instead of JPEG, because I do not think I should be using a lossless file format if I'm hunting

for alignment errors in the output images. I can always convert the PNG to JPEG if I want to

publish the results.

The first version of my code tried to align all 3 images at the same time, by searching over two pairs of displacements: one for green and one for red. There were a total of 4 parameters to match. I thought that, even if the luminosity of two different color channels might have a lot of differences, I could get a pretty good answer by using the luminosity of all 3 color channels at once. I noticed my code ran really slowly and this was not the suggested method on the project spec, so I tried something else.

For my error metric, I used the L2 norm of the difference of the two images. I did not normalize

this error metric, because I would only be comparing images that were all the same size. This is

because I designed my get_displaced() function to also take in an extra parameter

that would guarantee that, in one iteration of the algorithm, all of the compared images would

be the same size.

Finally, I added the image pyramid optimization to my algorithm. I set a recursion base case threshold at 100 pixels, so images that were less than 100 pixels on the longest side would not be resized any smaller. On each recursive call, I reduced the size of my input images by a factor of 2. When the recursive call returned, I scaled up the estimated alignment parameters by a factor of 2.

This initial version of the algorithm produced this image, which is pretty good at a first glance:

However, the 100% crop reveals that there is a lot of odd colors on the edges:

Plus, some of the example input images did not align at all:

Here are all of the results produced by this initial algorithm. The parameters are expressed

as (dy, dx).

We see imperfections in the alignment of most images, but only one image (emir) is seriously misaligned. This misalignment will be addressed in the next section.

I used some extra techniques to improve the performance of my algorithm on the images that it fails to align.

My first idea was to expand the search space from a displacement pair (x, y) to an 8-tuple of parameters (x1, y1, x2, y2, x3, y3, x4, y4) that represented the 4 corners of a rectangular image. With the 4 corners, I would use a linear perspective transformation matrix to align the green channel to the blue channel, and another transformation matrix to align the red channel to the blue channel.

The main problem with this approach is that it would be too slow to implement, if I just added on the extra parameters to my current code. So, I came up with two modifications to this plan: #1) I would use 2 corners (top-left and bottom-right) instead of 4, which contains almost the same expressive power, but fewer dimensions, and #2) I would try to optimize one parameter at a time, while holding the others fixed.

My algorithm tweaks each parameter one at a time, and finds the tweak that yields the lowest error. Then, it updates the best guess for the alignment parameters with these new values. On each level of the image pyramid, this tweak-update cycle is performed 8 times.

So, my search space became (x1, y1, x2, y2). I represented this internally in my code as (y1, x1, y2, x2), because it makes more sense with Python's array indexing.

I used a function called sobel(), which was part of the scikit-image filters

module. It produces an outline of the image that correspond to the edges. However, the edges

appear black-er when they are less defined edges, and white-er when they are more defined. This

is nicer than binary True/False edges, because edges in different color channels might not

always show up the same way.

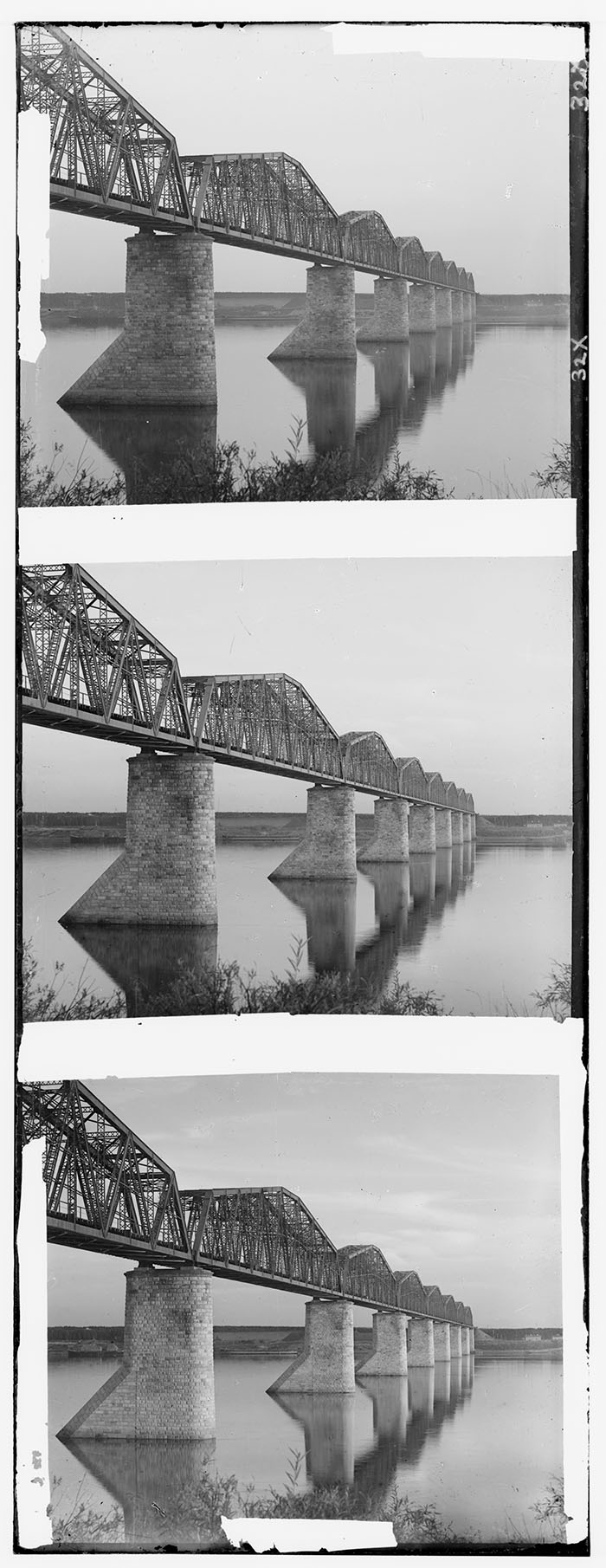

One reason I wanted to implement the additional parameters (x1, y1, x2, y2) was to remove the color fringes in the bridge photo. The effect is especially notieable at the edges of the image, so I have chosen to examine those pink and yellow fringes. Here is a full preview and a 100% crop of the original algorithm's result:

I improved the result by enabling edge detection and the extra scaling parameters. Here is the same full preview and same 100% crop of the improved algorithm's result:

Not bad!

The combination of edge detection and scaled parameters seems to have fixed the misaligned emir.tif input as well. Here is the result of the original algorithm:

And here is the result of the improved algorithm:

Strangely, turning off either scaling or edge detection causes the image to be misaligned. The image is only properly aligned when both features are enabled together.

Here are all of the results produced by the final version of the algorithm. The parameters are

expressed as (dy1, dx1, dy2, dx2).

Woah! It looks like there are a lot more misaligned images than there were even the first attempt algorithm. However, I noticed that if I turn off either edge detection or scaling, I can make the misalignment disappear.

Just for fun, here are some other images from the Prokudin-Gorskii collection. They were all aligned properly with both edge detection and scaling turned on.

00027a

(0, 0, 3208, 3723),

(-17, -46, 3180, 3667),

(-38, -108, 3162, 3608)

00476a

(0, 0, 3241, 3751),

(1, 22, 3228, 3757),

(4, -23, 3237, 3708)

00488a

(0, 0, 3248, 3770),

(-22, -45, 3211, 3749),

(-34, -109, 3206, 3709)